Large Language Models (LLMs) have changed how we interact with technology. They can generate human-like text, assist in creative writing, answer questions, and summarize content at scale. But as the demand for actionable intelligence grows, the next evolution has arrived: agentic LLMs.

While traditional LLMs work more like responsive assistants, agentic LLMs are proactive collaborators. They go beyond answering questions to planning, acting, and iterating.

In this blog, we’ll explore the core differences between these two paradigms and what they mean for businesses, developers, and users.

What Are Traditional LLMs?

Traditional Large Language Models (LLMs) represent the first major wave of generative AI. These models, such as OpenAI’s GPT-3.5, Anthropic’s Claude 2, and the early versions of Google’s Bard, are designed to process natural language inputs and generate relevant outputs.

Their core operating principle is statelessness: each prompt is treated as an isolated event, with no memory of past interactions unless memory is explicitly engineered into the application layer.

Key Characteristics

- Prompt-response architecture

Each interaction with a traditional LLM is a single prompt-response exchange: the user submits a prompt, and the model returns one output. Multi-turn chat works only because the application resends earlier messages as part of each new prompt; the model itself has no built-in notion of context beyond what the prompt contains. This makes traditional LLMs highly responsive, but reactive rather than proactive.

- No persistent memory

These models cannot remember anything from previous sessions or conversations unless context is manually passed along. For example, if you ask a question today and return tomorrow, the model won’t recall your previous interaction. This limits their ability to personalize or build cumulative understanding over time.

- Limited goal orientation

Traditional LLMs are not equipped to autonomously break down high-level goals into sub-tasks. Any sense of “planning” or “strategy” must be simulated by chaining prompts manually. There’s no intrinsic loop of thinking, acting, observing, and refining.

- Tool use through prompt engineering

While traditional LLMs can simulate tool use (like pretending to run code or browse the web), they don’t have native access to external tools unless embedded in a platform that facilitates it (e.g., a plugin environment or API wrapper). Even then, they need explicit prompting to do so.
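The statelessness and manual prompt chaining described above can be illustrated with a short Python sketch. `fake_llm` is a hypothetical stand-in for a completion API, not a real library call: its output depends only on the prompt passed in on that call. Any memory lives in the application's own `history` list, and any multi-step "plan" is a fixed chain of prompts hard-coded by the developer.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stateless model: output depends only on this prompt."""
    lines = prompt.strip().splitlines()
    last_line = lines[-1] if lines else ""
    return f"[reply to: {last_line}]"


class ChatSession:
    """Application-layer memory: the model itself remembers nothing."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self.llm = llm
        self.history: List[str] = []

    def ask(self, user_message: str) -> str:
        self.history.append(f"User: {user_message}")
        # The full transcript is resent on every call; without it the
        # model would have no idea what was said before.
        reply = self.llm("\n".join(self.history))
        self.history.append(f"Assistant: {reply}")
        return reply


def manual_chain(llm: Callable[[str], str], topic: str) -> str:
    """A developer-written prompt chain: the 'plan' lives in this code."""
    outline = llm(f"Write an outline about {topic}")
    draft = llm(f"Expand this outline into a draft: {outline}")
    return llm(f"Edit this draft for clarity: {draft}")
```

Because `ChatSession` resends the whole transcript on every call, prompt length grows with the conversation; production applications typically truncate or summarize older turns, which this sketch does not attempt. A new `ChatSession` starts with an empty history, so nothing carries over between sessions unless the application stores it.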

What Are Agentic LLMs?

Agentic LLMs are the next leap forward. Think of them as AI teammates instead of AI tools. They combine reasoning, memory, planning, and tool use to autonomously pursue goals over time.

Key Characteristics:

- Persistent memory: Store and recall user preferences, task history, or context across sessions.

- Goal-directed behavior: Understand high-level objectives and break them into sub-tasks.

- Iterative execution: Take actions, assess outcomes, and decide next steps—without user intervention.

- Tool integration: Use calculators, databases, APIs, browsers, and more to complete tasks in real time.
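A minimal sketch of the loop these characteristics describe (decide on an action, call a tool, observe the result, repeat, then remember the outcome) might look like the Python below. It assumes a toy `calculator` tool and a rule-based `toy_policy` function standing in for the LLM's reasoning; both names are hypothetical, and a real agent would call a model and external APIs at those points. The `max_steps` cap is a common safeguard against runaway loops.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str]  # (tool name, tool input)


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a+b' or 'a*b' on two integers only."""
    for op in ("+", "*"):
        if op in expression:
            left, right = (int(x) for x in expression.split(op))
            return str(left + right if op == "+" else left * right)
    raise ValueError(f"unsupported expression: {expression!r}")


def toy_policy(goal: str, observations: List[str]) -> Optional[Step]:
    """Hypothetical stand-in for an LLM choosing the next action."""
    if goal != "order total":
        return None                                      # unknown goal
    if observations and observations[-1].startswith("error"):
        return None                                      # give up after a failure
    if not observations:
        return ("calculator", "3*40")                    # 3 items at 40 each
    if len(observations) == 1:
        return ("calculator", f"{observations[0]}+25")   # add 25 shipping
    return None                                          # goal reached


class Agent:
    def __init__(self, tools: Dict[str, Callable[[str], str]],
                 policy: Callable[[str, List[str]], Optional[Step]],
                 max_steps: int = 10) -> None:
        self.tools = tools
        self.policy = policy
        self.max_steps = max_steps
        self.memory: Dict[str, str] = {}  # persists across run() calls
        self.tool_calls = 0

    def run(self, goal: str) -> str:
        if goal in self.memory:                          # recall, don't redo
            return self.memory[goal]
        observations: List[str] = []
        for _ in range(self.max_steps):                  # bounded loop
            action = self.policy(goal, observations)     # decide next step
            if action is None:
                break
            tool_name, tool_input = action
            self.tool_calls += 1
            try:
                observations.append(self.tools[tool_name](tool_input))  # act, observe
            except (KeyError, ValueError) as err:
                observations.append(f"error: {err}")     # feed failure back
        result = observations[-1] if observations else ""
        if result and not result.startswith("error"):
            self.memory[goal] = result                   # remember successes only
        return result
```

Running `Agent({"calculator": calculator}, toy_policy).run("order total")` makes two calculator calls ("3*40", then adding 25) and returns "145"; asking the same agent again is answered from `memory` without any further tool calls.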

Examples in the Wild:

- AutoGPT / BabyAGI: Open-source experimental agents that break a goal into tasks and work through them in a loop.

- Ema: An AI employee platform that can take ownership of enterprise workflows.

- Devin (by Cognition): A software engineering agent that writes, tests, and debugs code end-to-end.

- GPT Engineer: Generates a codebase from a natural-language specification and refines it iteratively.

Core Differences at a Glance

| Feature | Traditional LLMs | Agentic LLMs |
|---|---|---|
| Memory | Stateless | Persistent (internal or external memory systems) |
| Autonomy | Prompt-driven | Goal-driven; acts with minimal input |
| Planning | User determines every step | Agent breaks tasks into subtasks independently |
| Tool Use | Simulated or prompt-invoked | Integrated tool use (APIs, databases, browsers) |
| Iteration | Static one-shot response | Continuous feedback loop with adaptive actions |
| Interaction Style | Reactive | Proactive and self-guided |
| Use Cases | Chatbots, summarizers | Agents for CRM, DevOps, marketing, R&D |

Practical Implications for Enterprises

Agentic LLMs are not just an incremental improvement—they represent a step change in how work gets done. Their ability to reason, plan, and act autonomously brings significant benefits to enterprises, along with important risks that must be managed.

1. Boost in Productivity

Agentic LLMs can take ownership of tasks from start to finish, eliminating the need for constant human prompting. This can substantially raise productivity across roles and departments.

- Sales & Marketing: An agentic AI can autonomously:
  - Research a list of leads from LinkedIn or public databases
  - Enrich data using third-party tools or CRMs
  - Draft and personalize outreach emails
  - Schedule follow-ups based on engagement
  - Update the CRM and report insights to the sales lead

- Software Development: Agents like Devin or GPT Engineer can:
  - Generate boilerplate code
  - Set up repo structures
  - Write tests
  - Debug errors and even raise pull requests

- Knowledge Work: From legal contract analysis to market research synthesis, agentic systems can run loops to gather, filter, and present information—without the user babysitting the process.

Impact: Human teams shift from doing the work to orchestrating intelligent workflows. This allows more focus on strategic thinking and creativity.

2. Cost and Time Efficiency

By automating repetitive, multi-step processes that would otherwise require human handoffs, agentic LLMs offer significant time and cost savings.

- Customer Support: An AI agent can triage tickets, extract key issues, search knowledge bases, draft responses, and even escalate cases with summaries.

- HR Onboarding: AI can guide new hires through document submission, policy familiarization, IT setup, and orientation—all within a conversational workflow.

- Finance & Ops: Agents can monitor invoices, flag anomalies, and initiate approval workflows.

Example: A traditional LLM might help generate a one-off response to a support question. An agentic LLM, however, can monitor tickets 24/7, learn from recurring issues, and initiate preventative actions (e.g., flagging a broken integration).

Results:

- Fewer human interventions

- Faster task completion

- Round-the-clock operational efficiency

- Reduced dependency on BPO/offshore outsourcing for routine tasks

3. Custom Workflow Integration

Unlike consumer LLMs that exist in isolation, agentic LLMs can be embedded directly into enterprise ecosystems. Through API calls, scripting, and plugin architectures, they can interact with internal tools and follow predefined protocols.

- CRM and ERP: Agents can read/write customer and financial data, generate dashboards, or flag compliance risks.

- Custom Tools: Enterprises can connect agents to proprietary platforms via SDKs or secured APIs.

- Security and Policy Enforcement: Agents can be configured to follow enterprise-grade rules (e.g., PII redaction, audit logging, permission checks).

Use Case: An AI project coordinator can interact with Jira, Notion, GitHub, and Slack—checking deadlines, nudging contributors, and updating status without human prodding.

Benefit: This creates a seamless AI-human hybrid workflow—where agents handle coordination and data manipulation, while humans focus on decisions and oversight.
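The security and policy enforcement point above can be sketched as a wrapper that every tool call passes through, combining a permission check, PII redaction, and an audit log. In this Python example, the permission map, the email-only `redact_pii` filter, and the tools `crm_lookup` and `delete_account` are all hypothetical placeholders; real redaction and access control would rely on the enterprise's own identity and data-loss-prevention systems.

```python
import re
from datetime import datetime, timezone
from typing import Callable, Dict, List, Set

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact_pii(text: str) -> str:
    """Toy redaction: masks email addresses only."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


# Hypothetical enterprise tools.
def crm_lookup(account_id: str) -> str:
    return f"{account_id}: contact jane.doe@example.com, status active"


def delete_account(account_id: str) -> str:
    return f"{account_id} deleted"


class GuardedToolbox:
    def __init__(self, tools: Dict[str, Callable[[str], str]],
                 permissions: Dict[str, Set[str]]) -> None:
        self.tools = tools
        self.permissions = permissions  # agent id -> allowed tool names
        self.audit_log: List[dict] = []

    def call(self, agent_id: str, tool_name: str, arg: str) -> str:
        allowed = tool_name in self.permissions.get(agent_id, set())
        # Log every attempt, allowed or not, with PII masked.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": tool_name,
            "arg": redact_pii(arg),
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{agent_id} is not allowed to call {tool_name}")
        # Mask PII in the result before it reaches the model or other systems.
        return redact_pii(self.tools[tool_name](arg))
```

Logging denied attempts as well as successful ones is a deliberate choice here: a pattern of blocked calls is often the earliest sign that an agent's objective has drifted.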

4. Risks Multiply With Autonomy

The power of autonomous agents comes with increased responsibility and risk. Giving LLMs the ability to act on real-world systems introduces failure points that must be proactively mitigated.

Key Risk Areas:

- Hallucinations with Impact: A harmless error in a chatbot becomes dangerous when an agent books a meeting with the wrong client, alters critical data, or sends incorrect information externally.

- Misaligned Objectives: If the agent’s goal isn’t aligned with the business intent, it might optimize for the wrong outcome—like over-emailing leads or misclassifying documents.

- Security Vulnerabilities: Agents with access to APIs, filesystems, or databases need strict guardrails to prevent data leaks, unauthorized actions, or system compromise.

- Loss of Oversight: Complex agentic systems operating across time and tools can become black boxes unless designed with observability and logging in mind.

Real Risk: Imagine a finance bot accidentally wiring funds due to misinterpreted intent—or a customer support agent escalating confidential user information to the wrong team.

Choosing the Right Model for the Job

As enterprises adopt LLMs into their workflows, it’s critical to match the right model architecture to the nature of the task. Not every problem requires full autonomy—and in many cases, overengineering can introduce unnecessary risk and complexity.

Here’s a practical decision framework to help guide your deployment strategy:

Use Traditional LLMs When:

Traditional LLMs (e.g., GPT-3.5, Claude Instant, Gemini in chat mode) excel at stateless, prompt-response tasks where the environment is simple and user-driven.

Ideal For:

- Quick, one-off tasks:
  - Generating a blog title
  - Summarizing a news article
  - Translating a sentence

- Tasks with no memory requirements:
  - Rewriting content for tone or clarity
  - Creating simple code snippets or templates

- Static or repetitive environments:
  - FAQ bots that don’t require real-time updates
  - Basic email assistants

- Human-guided workflows:
  - When users want full control over each step
  - Great for ideation, drafting, and light editing

Example: A marketing analyst asking, “Give me 5 email subject lines for a new product launch.”

The LLM returns suggestions in one go, with no need for planning or memory.

Use Agentic LLMs When:

Agentic models are designed for autonomous, context-rich, and multi-step operations. These systems shine when the goal is to delegate not just thinking, but doing.

Ideal For:

- Complex, multi-stage workflows:
  - A sales agent that researches leads, drafts outreach, and updates CRM records
  - An AI ops bot that diagnoses issues and files bug reports or escalates incidents

- Hands-free execution:
  - Scheduling meetings across calendars
  - Running recurring reports or syncing data from different tools

- Tasks that require live data, reasoning, and adaptation:
  - Analyzing competitor pricing across sources and making recommendations
  - Continuously updating documentation based on internal product changes

- Enterprise process automation:
  - Ticket triage and resolution in IT support
  - Automated onboarding workflows in HR or legal

Example: An agentic research assistant that continuously scans specified websites, extracts relevant updates, summarizes them, and notifies the right stakeholders every morning—without being re-prompted.

Use a Hybrid Model When:

Hybrid approaches combine the efficiency of autonomy with the oversight and precision of human intervention. This is often the most practical and safest route in regulated or mission-critical domains.

Ideal For:

- Human-in-the-loop workflows:
  - Drafting medical documentation where a clinician provides final sign-off
  - Legal document review, where the agent flags risks but the lawyer decides next steps

- Blended memory needs:
  - Some workflows require context retention (e.g., multi-turn HR case resolution), while others don’t (e.g., policy FAQs)

- Risk-sensitive environments:
  - Finance, insurance, government, and healthcare, where decisions must be auditable, explainable, and reversible

Example: In customer service, a hybrid agent handles ticket categorization and drafts responses, but a human reviews high-priority or sensitive queries before sending.
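This hybrid pattern can be sketched as a routing rule: the agent always categorizes and drafts, but anything high-priority or touching a sensitive topic waits in a review queue until a person approves it. The keyword list, the keyword-based `categorize` function (a stand-in for an LLM classifier), and the class names below are illustrative assumptions, not a prescribed design.

```python
from dataclasses import dataclass, field
from typing import List

# Illustrative topics that always require human review.
SENSITIVE_KEYWORDS = ("refund", "legal", "password", "medical")


@dataclass
class Ticket:
    ticket_id: str
    text: str
    priority: str  # "low", "normal", or "high"


@dataclass
class Draft:
    ticket_id: str
    category: str
    reply: str


def categorize(ticket: Ticket) -> str:
    """Keyword stand-in for an LLM classifier."""
    text = ticket.text.lower()
    if "invoice" in text or "refund" in text:
        return "billing"
    if "login" in text or "password" in text:
        return "account"
    return "general"


@dataclass
class HybridDesk:
    sent: List[Draft] = field(default_factory=list)
    review_queue: List[Draft] = field(default_factory=list)

    @staticmethod
    def needs_human(ticket: Ticket) -> bool:
        text = ticket.text.lower()
        return ticket.priority == "high" or any(k in text for k in SENSITIVE_KEYWORDS)

    def handle(self, ticket: Ticket) -> str:
        category = categorize(ticket)
        draft = Draft(ticket.ticket_id, category,
                      f"Thanks for contacting us about your {category} issue.")
        if self.needs_human(ticket):
            self.review_queue.append(draft)  # held until a person approves
            return "queued_for_review"
        self.sent.append(draft)              # low-risk: the agent sends directly
        return "sent"

    def approve(self, ticket_id: str, edited_reply: str = "") -> Draft:
        """Human sign-off: optionally edit the draft, then release it."""
        for i, draft in enumerate(self.review_queue):
            if draft.ticket_id == ticket_id:
                if edited_reply:
                    draft.reply = edited_reply
                self.sent.append(self.review_queue.pop(i))
                return draft
        raise KeyError(ticket_id)
```

The agent still does the categorization and drafting for every ticket, so reviewers edit rather than write from scratch; only the decision to send is gated.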

Future Outlook: From Tools to True Collaborators

The trajectory of large language models is unmistakable—they are evolving from passive assistants into proactive collaborators. Agentic LLMs represent the next frontier, where AI systems not only understand instructions but take initiative, adapt over time, and work across systems to deliver outcomes.

Here are some of the major developments on the horizon:

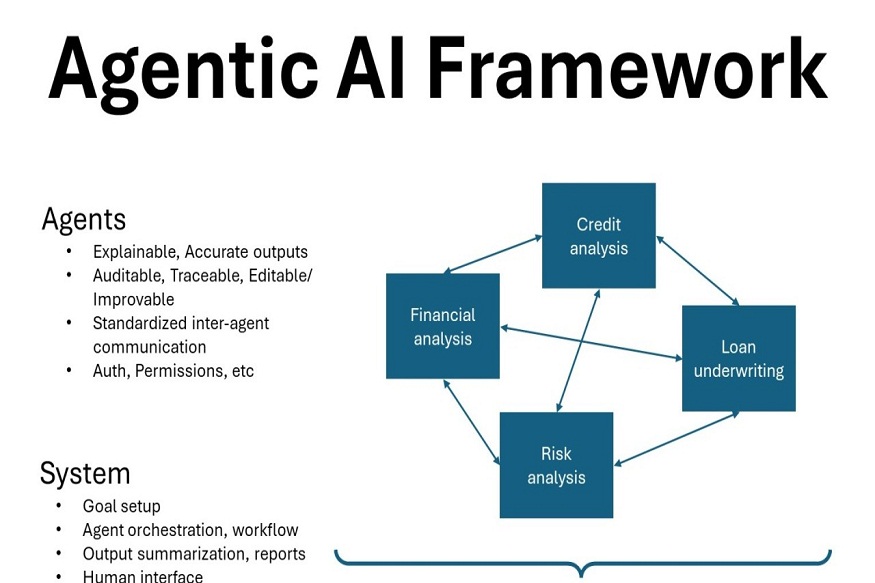

Multi-Agent Systems

Instead of relying on a single general-purpose agent, the future lies in orchestrated networks of specialized agents. Each agent can be assigned a specific responsibility—whether it’s research, communication, coordination, or monitoring—and they can collaborate to solve complex problems.

For example, in a product development workflow, one agent might handle competitor research, another might draft product documentation, while a third manages cross-functional communication. This kind of division of labor mimics human teams and offers scalability without losing precision.
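One simple way to wire up the product-development example is an orchestrator that runs specialized agents in sequence and lets each read the others' outputs from a shared context (often called a blackboard). In this Python sketch the three agents are hypothetical stubs that return strings; in practice each would wrap its own model, prompt, and tools, and an orchestrator might run independent agents concurrently or let an LLM decide the routing.

```python
from typing import Callable, Dict, List, Tuple

# (task description, shared context) -> output
AgentFn = Callable[[str, Dict[str, str]], str]


# Hypothetical specialist agents; real ones would each wrap a model and tools.
def research_agent(task: str, context: Dict[str, str]) -> str:
    return f"findings on {task}"


def docs_agent(task: str, context: Dict[str, str]) -> str:
    return f"{task} draft based on {context.get('research', 'no research yet')}"


def comms_agent(task: str, context: Dict[str, str]) -> str:
    return f"{task}: {context.get('docs', 'no docs yet')}"


class Orchestrator:
    def __init__(self, agents: Dict[str, AgentFn]) -> None:
        self.agents = agents
        self.context: Dict[str, str] = {}  # shared "blackboard" keyed by role

    def run(self, workflow: List[Tuple[str, str]]) -> Dict[str, str]:
        for role, task in workflow:
            agent = self.agents.get(role)
            if agent is None:
                raise ValueError(f"no agent registered for role {role!r}")
            # Each agent sees a copy of everything produced so far.
            self.context[role] = agent(task, dict(self.context))
        return self.context
```

Passing a copy of the context keeps any one agent from silently overwriting another's output; only the orchestrator writes to the shared blackboard.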

Continual Learning

Today’s LLMs operate within fixed knowledge boundaries set at training time. Agentic models of the future will be designed to learn incrementally from their environment, adapting to new data, user feedback, and evolving business needs. Because this learning happens continuously rather than in scheduled retraining cycles, it will need strict oversight to ensure compliance and reliability.

For enterprises, this means agents can improve over time without requiring manual retraining, making them more responsive and aligned with organizational goals.

Standardized APIs and Interoperability

As more organizations build internal agent-based systems, the need for standard communication protocols becomes critical. APIs that allow agents to interact consistently with enterprise software—from CRMs to workflow automation platforms—will streamline integration and deployment.

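Emerging open protocols, such as Anthropic’s Model Context Protocol (MCP) for connecting agents to tools and data sources, are beginning to offer these standards, allowing organizations to avoid vendor lock-in and build composable agent ecosystems.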

Edge and On-Prem Deployments

For industries with strict data privacy or latency requirements, agentic systems need to run close to the source of data. Edge computing will allow agents to operate on local devices or private servers, enabling real-time decision-making while ensuring data stays within regulatory boundaries.

Use cases include on-premises AI agents for hospitals, retail environments, or secure financial institutions.

Observability and Self-Diagnosis

Next-generation agentic systems will include built-in observability features—allowing enterprises to track how decisions are made, flag unusual behavior, and audit the system over time. Some agents may even self-identify failures or limitations and escalate to humans when necessary.

This level of transparency will be crucial for enterprise adoption, particularly in regulated industries where accountability is non-negotiable.
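Self-diagnosis and escalation can start simply: record a trace event for every attempt, retry a failing step a bounded number of times, and hand the task to a human once the retry budget is spent. The `MonitoredAgent` class and its three-attempt default below are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TraceEvent:
    step: str
    attempt: int
    ok: bool
    detail: str  # result on success, error repr on failure


@dataclass
class MonitoredAgent:
    max_attempts: int = 3
    trace: List[TraceEvent] = field(default_factory=list)
    escalations: List[str] = field(default_factory=list)

    def run_step(self, name: str, action: Callable[[], str]) -> Optional[str]:
        for attempt in range(1, self.max_attempts + 1):
            try:
                result = action()
            except Exception as err:  # record the failure, then retry
                self.trace.append(TraceEvent(name, attempt, False, repr(err)))
                continue
            self.trace.append(TraceEvent(name, attempt, True, result))
            return result
        # Retry budget exhausted: stop acting and hand the task to a person.
        self.escalations.append(
            f"{name} failed {self.max_attempts} times; "
            f"last error: {self.trace[-1].detail}"
        )
        return None
```

In a real deployment, the `escalations` list would feed a ticketing or paging system, and the trace would go to durable storage so auditors can reconstruct what the agent tried and why it stopped.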

Conclusion

Agentic LLMs mark a fundamental change in how we think about AI. Where traditional LLMs act as reactive tools—waiting for instructions and producing static outputs—agentic LLMs are proactive, adaptable, and capable of autonomous execution.

They bring together capabilities such as planning, memory, communication, and tool use into a cohesive, goal-oriented system. As a result, they are well suited to transform knowledge work, automate complex workflows, and augment human teams in ways that were previously impractical.

For enterprises, the implications are substantial. Understanding the difference between passive and agentic systems—and how to architect, deploy, and govern them—will become a key differentiator in digital transformation efforts.

The question is no longer, “What can an LLM do?” but rather, “What can a capable AI teammate help us achieve?”

As organizations begin to reimagine their processes around these new capabilities, those that invest thoughtfully in agentic systems will gain a significant advantage—unlocking higher efficiency, greater innovation, and more adaptive operations across the board.